RL’s origins and historical context

RL copies a very simple principle from nature. The psychologist Edward Thorndike documented it more than 100 years ago. Thorndike placed cats inside boxes from which they could escape only by pressing a lever. After a considerable amount of pacing around and meowing, the animals would eventually step on the lever by chance. After they learned to associate this behaviour with the desired outcome, they eventually escaped with increasing speed.

Some of the earliest AI researchers believed that this process might be usefully reproduced in machines. In 1951, Marvin Minsky, a student at Harvard who would become one of the founding fathers of AI, built a machine that used a simple form of reinforcement learning to mimic a rat learning to navigate a maze. Minsky’s Stochastic Neural Analog Reinforcement Calculator (SNARC) consisted of dozens of tubes, motors, and clutches that simulated the behaviour of 40 neurons and synapses. As a simulated rat made its way out of a virtual maze, the strength of some synaptic connections would increase, thereby reinforcing the underlying behaviour.

There were few successes over the next few decades. In 1992, Gerald Tesauro demonstrated a program that used the technique to play backgammon. It became skilled enough to rival the best human players, a landmark achievement in AI. But RL proved difficult to scale to more complex problems.

In March 2016, however, AlphaGo, a program trained using RL, won against one of the best Go players of all time, South Korea’s Lee Sedol. This milestone reopened the Pandora’s box of RL research. It turns out that the key to strong RL is combining it with deep learning.

Current usage and major methods of RL

Thanks to current RL research, computers can now automatically learn to play ATARI games and beat world champions at Go, simulated quadrupeds are learning to run and leap, and robots are learning to perform complex manipulation tasks that defy explicit programming.

However, while RL’s advancement has accelerated, progress has been driven less by new ideas or additional research than by more data, processing power and infrastructure. In general, there are four separate factors that hold back AI:

- Processing power (the obvious one: Moore’s Law, GPUs, ASICs),

- Data (in a specific form, not just somewhere on the internet – e.g. ImageNet),

- Algorithms (research and ideas, e.g. backprop, CNN, LSTM), and

- Infrastructure (Linux, TCP/IP, Git, AWS, TensorFlow, ...).

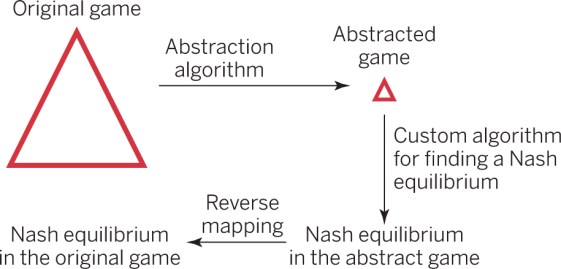

The same pattern can be seen in computer vision, where the 2012 AlexNet was largely a deeper and wider version of the Convolutional Neural Networks (CNNs) of the 1990s. Similarly for RL, ATARI’s Deep Q-Learning is an implementation of the standard Q-learning algorithm with function approximation, where the function approximator is a CNN. AlphaGo uses Policy Gradients with Monte Carlo tree search (MCTS).

RL’s most optimal method vs. human learning

Generally, RL approaches can be divided into two core categories. The first searches directly for the mapping from states to actions (the policy) that performs best on the problem of interest. Genetic algorithms, genetic programming and simulated annealing have commonly been employed in this class of RL approaches. The second estimates the utility of taking an action in a given state via statistical techniques or dynamic programming methods, such as TD(λ) and Q-learning. To date, RL has been successfully applied in many complex real-world applications, including autonomous helicopters, humanoid robotics and autonomous vehicles.
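To make the second category concrete, here is a minimal sketch of tabular Q-learning. The chain environment, the function name `q_learning_chain` and all hyperparameter values are assumptions chosen for illustration; they do not come from any of the systems mentioned above.

```python
import random

def q_learning_chain(n_states=5, episodes=500, alpha=0.1, gamma=0.9,
                     epsilon=0.1, seed=0):
    """Tabular Q-learning on a hypothetical 1-D chain (illustrative only).

    States are 0..n_states-1. Action 0 moves left, action 1 moves right.
    Reaching the right-most state ends the episode with reward 1.
    """
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_states)]
    goal = n_states - 1
    for _ in range(episodes):
        state = 0
        while state != goal:
            # Epsilon-greedy: explore sometimes, and break ties at random.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0
            # The terminal state has no future value.
            future = 0.0 if next_state == goal else max(q[next_state])
            # Move the estimate towards reward + discounted best next value.
            q[state][action] += alpha * (reward + gamma * future - q[state][action])
            state = next_state
    return q

q_table = q_learning_chain()
# Greedy action per non-terminal state (1 means "move right").
greedy_policy = [0 if left > right else 1 for left, right in q_table[:-1]]
```

The policy is never stored explicitly here; it is read off the learned utility estimates by picking the highest-valued action in each state.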

Policy Gradients (PGs), one of RL’s most used methods, have been shown to work better than Q-learning when tuned well. PGs are preferred because they use an explicit policy and a principled approach that directly optimises the expected reward.
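As a rough illustration of what "directly optimising the expected reward" with an explicit policy can look like, the sketch below applies a basic policy gradient update (REINFORCE with a running-average baseline) to a two-armed bandit. The bandit, the payout probabilities in `arm_means` and the learning rate are assumptions made up for this example.

```python
import math
import random

def softmax(prefs):
    """Turn a list of logits into action probabilities."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]

def reinforce_bandit(arm_means=(0.2, 0.8), steps=2000, lr=0.1, seed=0):
    """Policy gradient on a hypothetical Bernoulli bandit (illustrative only)."""
    rng = random.Random(seed)
    prefs = [0.0] * len(arm_means)  # policy parameters: one logit per arm
    baseline = 0.0                  # running average reward, reduces variance
    for t in range(1, steps + 1):
        probs = softmax(prefs)
        # Sample an action from the explicit stochastic policy.
        action = rng.choices(range(len(prefs)), weights=probs)[0]
        reward = 1.0 if rng.random() < arm_means[action] else 0.0
        advantage = reward - baseline
        # d log pi(action) / d logit_k = 1[k == action] - probs[k]
        for k in range(len(prefs)):
            grad_log = (1.0 if k == action else 0.0) - probs[k]
            prefs[k] += lr * advantage * grad_log
        baseline += (reward - baseline) / t
    return softmax(prefs)

final_probs = reinforce_bandit()
```

After training, `final_probs` should put most of its mass on the better-paying arm, since actions followed by above-average reward have their probability increased.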

Before trying PGs (the cannon), it is recommended to first try the cross-entropy method (CEM) (the normal gun), a simple stochastic hill-climbing “guess and check” approach loosely inspired by evolution. And if you really need to, or insist on, using PGs for your problem, use a variation called Trust Region Policy Optimization (TRPO), which usually works better and more consistently than vanilla PG in practice. The main idea is to avoid parameter updates that change the policy dramatically, as enforced by a constraint on the KL divergence between the distributions predicted by the old and the new policies on a batch of data.
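The "guess and check" loop of CEM can be sketched in a few lines. In the sketch below, `toy_score` stands in for the return of a policy with the given parameters; it, the `target` vector and the population settings are assumptions for illustration, not part of any published benchmark.

```python
import random
import statistics

def cross_entropy_method(score, dim, iterations=30, population=50,
                         elite_frac=0.2, init_std=5.0, seed=0):
    """Minimal CEM sketch: sample parameters, keep the best, refit, repeat."""
    rng = random.Random(seed)
    mean = [0.0] * dim
    std = [init_std] * dim
    n_elite = max(2, int(population * elite_frac))
    for _ in range(iterations):
        # "Guess": draw candidate parameter vectors from the current Gaussian.
        samples = [[rng.gauss(mean[i], std[i]) for i in range(dim)]
                   for _ in range(population)]
        # "Check": rank candidates by score and keep the elite fraction.
        samples.sort(key=score, reverse=True)
        elite = samples[:n_elite]
        # Refit the sampling distribution to the elite set.
        for i in range(dim):
            column = [candidate[i] for candidate in elite]
            mean[i] = statistics.mean(column)
            std[i] = statistics.pstdev(column) + 1e-3  # keep some exploration
    return mean

target = [3.0, -2.0]  # hypothetical optimum of the toy problem

def toy_score(params):
    """Stand-in for an episode return: higher is better, peak at `target`."""
    return -sum((p - t) ** 2 for p, t in zip(params, target))

best = cross_entropy_method(toy_score, dim=2)
```

No gradients are computed anywhere, which is a large part of why the method is easy to implement and debug.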

PGs, however, have a few disadvantages: they typically converge to a local rather than a global optimum, and evaluating a policy is typically inefficient and has high variance. PGs also require a lot of training samples, take a long time to train, and are hard to debug when they don’t work.

PG is a fancy form of guess-and-check, where the “guess” refers to sampling rollouts from the current policy and the “check” refers to encouraging actions that lead to good outcomes. This represents the state of the art in how we currently approach RL problems. But compare that to how a human might learn a task such as a game of Pong. You show him/her the game and say something along the lines of “You’re in control of a paddle and you can move it up or down, and your goal is to bounce the ball past the other player”, and you’re set and ready to go. Notice some of the differences:

- Humans communicate the task/goal in a language (e.g. English), but in a standard RL case, you assume an arbitrary reward function that you have to discover through environment interactions. It can be argued that if a human went into a game without knowing anything about the reward function, the human would have a lot of difficulty learning what to do, whereas PGs would be indifferent and would likely work much better.

- A human brings in a huge amount of prior knowledge, such as elementary physics (concepts of gravity, constant velocity, ...) and intuitive psychology. He/she also understands the concept of being “in control” of a paddle, and that it responds to his/her UP/DOWN key commands. In contrast, algorithms start from scratch, which is simultaneously impressive (because it works) and depressing (because we lack concrete ideas for how not to).

- PGs are a brute force solution, where the correct actions are eventually discovered and internalised into a policy. Humans build a rich, abstract model and plan within it.

- PGs have to actually experience a positive reward, and experience it very often in order to eventually shift the policy parameters towards repeating moves that give high rewards. On the other hand, humans can figure out what is likely to give rewards without ever actually experiencing the rewarding or unrewarding transition.

In games/situations with frequent reward signals that require precise play, fast reflexes, and not much planning, PGs can quite easily beat humans. So once you understand the “trick” by which these algorithms work, you can reason through their strengths and weaknesses.

PGs don’t easily scale to settings where huge amounts of exploration are difficult to obtain. Instead of requiring samples from a stochastic policy and encouraging the ones that get higher scores, deterministic policy gradients use a deterministic policy and get the gradient information directly from a second network (called a critic) that models the score function. This approach can in principle be much more efficient in settings with high-dimensional actions, where sampling actions provides poor coverage, but so far it seems empirically slightly finicky to get working.
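The core idea, taking the gradient from a critic rather than from sampled actions, can be shown on a toy problem. In this sketch the critic is a fixed, hand-written function rather than a trained network, and the one-parameter linear policy is likewise an assumption made to keep the example small.

```python
import random

def critic_q(state, action):
    """Hypothetical critic: the best action for a state is 2 * state."""
    return -(action - 2.0 * state) ** 2

def critic_grad_action(state, action):
    """dQ/da for the critic above (a learned critic would use backprop)."""
    return -2.0 * (action - 2.0 * state)

def train_deterministic_policy(steps=500, lr=0.05, seed=0):
    """Deterministic policy gradient sketch with policy: action = weight * state."""
    rng = random.Random(seed)
    weight = 0.0
    for _ in range(steps):
        state = rng.uniform(-1.0, 1.0)
        action = weight * state  # deterministic: no action sampling
        # Chain rule: dQ/dweight = dQ/da * da/dweight, with da/dweight = state.
        weight += lr * critic_grad_action(state, action) * state
    return weight

learned_weight = train_deterministic_policy()
```

Here `learned_weight` should approach 2.0, the value that maximises the critic’s score for every state. In a real system the critic must itself be learned, which is where much of the practical difficulty comes from.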

There is also a line of work that tries to make the search process less hopeless by adding additional supervision. In many practical cases, for instance, one can obtain expert trajectories from a human. For example, AlphaGo first uses supervised learning to predict human moves from expert Go games, and the resulting human-mimicking policy is later fine-tuned with PGs on the “real” goal of winning the game.

RL’s new frontiers: MAS, PTL, evolution, memetics and eTL

There is another method called Parallel Transfer Learning (PTL), which aims to optimise RL in multi-agent systems (MAS). MAS are computer systems composed of many interacting, autonomous agents that solve problems within an environment of interest. MAS have a wide array of applications in industrial and scientific fields, such as resource management and computer games.

In MAS, as agents interact with and learn from one another, the challenge is to identify suitable source tasks from multiple agents that contain mutually useful information to transfer. In conventional MAS (cMAS), which are best suited to simple environments, the actions of each agent are pre-defined for the possible states of the environment. Normal RL methodologies have been used as the learning process of cMAS agents through trial-and-error interactions in a dynamic environment.

In PTL, each agent broadcasts its knowledge to all other agents and decides whose knowledge to accept by comparing the reward reported by other agents with the expected reward it predicts itself. Nevertheless, agents in this approach tend to infer incorrect actions in unseen circumstances or complex environments.
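The broadcast-and-accept idea might be sketched as follows. This is not the published PTL algorithm: the `Agent` class, its `broadcast` and `consider` methods, and the simple "accept if the shared reward beats my own prediction" rule are all hypothetical simplifications for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class Agent:
    """Hypothetical agent holding reward estimates keyed by (state, action)."""
    name: str
    q_values: dict = field(default_factory=dict)

    def broadcast(self):
        """Share every (state, action, reward estimate) this agent holds."""
        return [(s, a, r) for (s, a), r in self.q_values.items()]

    def consider(self, messages):
        """Accept shared knowledge only where it beats the agent's own prediction."""
        accepted = 0
        for state, action, shared_reward in messages:
            # Unseen (state, action) pairs default to 0.0 (an assumption).
            predicted = self.q_values.get((state, action), 0.0)
            if shared_reward > predicted:
                self.q_values[(state, action)] = shared_reward
                accepted += 1
        return accepted

alice = Agent("alice", {("s0", "left"): 0.5})
bob = Agent("bob", {("s0", "left"): 0.2, ("s1", "right"): 0.9})
n_accepted = alice.consider(bob.broadcast())
```

In this toy version, the agent has no real basis for its prediction in a state it has never visited, so anything with a positive reported reward is accepted there. That is one plausible way the incorrect inferences mentioned above could arise.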

However, for more complex or changing environments, it is necessary to endow the agents with intelligence capable of adapting to an environment’s dynamics. A complex environment, almost by definition, implies complex interactions and demands learning capabilities from MAS that current RL methodologies are hard-pressed to provide. The more recent machine learning paradigm of Transfer Learning (TL) was introduced as an approach for leveraging valuable knowledge from related and well-studied problem domains to enhance the problem-solving abilities of MAS in complex environments. Since then, TL has been successfully used for enhancing RL tasks via methodologies such as instance transfer, action-value transfer, feature transfer and advice exchanging (AE).

Most RL systems aim to train a single agent or cMAS. The Evolutionary Transfer Learning framework (eTL) aims to develop intelligent and social agents capable of adapting to the dynamic environment of MAS and of solving problems more efficiently. It is inspired by Darwin’s theory of evolution (natural selection + random variation), whose principles govern the evolutionary knowledge transfer process. eTL constructs social selection mechanisms that are modelled after the principles of human evolution. It mimics natural learning, including the errors that are introduced by the physiological limits of the agents’ ability to perceive differences, thus generating “growth” and “variation” in the agents’ knowledge and giving them greater adaptability for complex problem solving. The essential backbone of eTL is the memetic automaton, which includes evolutionary mechanisms such as meme representation, meme expression, etc.

Memetics

The term “meme” can be traced back to Dawkins’ “The Selfish Gene”, where he defined it as “a unit of information residing in the brain and is the replicator in human cultural evolution.” For the past few decades, the meme-inspired science of memetics has attracted increasing attention in fields including anthropology, biology, psychology, sociology and computer science. In particular, one of the most direct and simplest applications in computer science for problem solving has been the memetic algorithm. Further research into meme-inspired computational models resulted in the concept of the memetic automaton, which integrates memes into units of domain information useful for problem-solving. Recently, memes have been defined as transformation matrices that can be reused across different problem domains for enhanced evolutionary search. Just as genes serve as “instructions for building proteins”, memes carry “behavioural instructions,” constructing models for problem solving.

Memetics in eTL

Meme representation and meme evolution form the two core aspects of eTL. Memes are additionally subject to meme expression and meme assimilation. Meme representation concerns what a meme is, meme expression is how an agent expresses its stored memes as behavioural actions, and meme assimilation captures new memes by translating corresponding behaviours into knowledge that blends into the agent’s mind-universe. The meme evolution processes (i.e. meme internal and meme external evolution) comprise the main behavioural learning aspects of eTL. To be specific, meme internal evolution denotes the process by which agents update their mind-universe via self-learning or personal grooming. In eTL, all agents undergo meme internal evolution by exploring the common environment simultaneously. Meme external evolution, which may occur during meme internal evolution, models the social interaction among agents, mainly via imitation, which takes place when memes are transmitted. Meme external evolution happens whenever the current agent identifies a suitable teacher agent via a meme selection process. Once the teacher agent is selected, meme transmission occurs to instruct how the agent imitates others. During this process, meme variation facilitates knowledge transfer among agents. Upon receiving feedback from the environment after performing an action, the agent then proceeds to update its mind-universe accordingly.

eTL implementation with learning agents

There are two implementations of learning agents that take the form of neurally-inspired learning structures, namely FALCON and a BP multilayer neural network. Specifically, FALCON is a natural extension of self-organizing neural models proposed for real-time RL, while BP is a classical multi-layer network that has been widely used in various learning systems.

- MAS with TL vs. MAS without TL: Most TL approaches outperform cMAS. TL endows agents with the capacity to benefit from knowledge transferred from better-performing agents, which accelerates their learning and lets them solve the complex task more efficiently and effectively.

- eTL vs. PTL and other TL approaches: FALCON and BP agents with eTL outperform PTL and other TL approaches because, when deciding whether to accept information broadcast by others, agents in PTL tend to make incorrect predictions in previously unseen circumstances. Further, eTL also attains higher success rates than all AE models thanks to its meme selection operator, which fuses the “imitate-from-elitist” and “like-attracts-like” principles so as to give agents the option of choosing more reliable teacher agents than the AE model does.
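One way to picture a teacher choice that fuses “imitate-from-elitist” with “like-attracts-like” is to score each candidate by both its fitness and its similarity to the learner. The sketch below is purely hypothetical: the dictionary layout, the `similarity` measure and the product-based score are assumptions for illustration and are not the actual eTL meme selection operator.

```python
import math

def similarity(traits_a, traits_b):
    """Hypothetical likeness measure in (0, 1]: 1 means identical traits."""
    distance = math.sqrt(sum((a - b) ** 2 for a, b in zip(traits_a, traits_b)))
    return 1.0 / (1.0 + distance)

def select_teacher(learner, candidates):
    """Pick the candidate with the best product of elitism and likeness."""
    best_fitness = max(c["fitness"] for c in candidates)

    def score(candidate):
        # "Imitate-from-elitist": prefer fitter agents.
        elitism = candidate["fitness"] / best_fitness if best_fitness > 0 else 0.0
        # "Like-attracts-like": prefer agents similar to the learner.
        likeness = similarity(learner["traits"], candidate["traits"])
        return elitism * likeness

    return max(candidates, key=score)

learner = {"name": "learner", "traits": [1.0, 0.0]}
candidates = [
    {"name": "A", "fitness": 10.0, "traits": [0.0, 1.0]},  # fittest, dissimilar
    {"name": "B", "fitness": 8.0, "traits": [1.0, 0.1]},   # fit and similar
    {"name": "C", "fitness": 2.0, "traits": [1.0, 0.0]},   # identical, unfit
]
teacher = select_teacher(learner, candidates)
```

With these made-up numbers the learner picks agent B, which is neither the fittest nor the most similar candidate but scores best on the two criteria combined.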

Conclusions

While the popularisation of RL can be traced back to Edward Thorndike and Marvin Minsky, RL is inspired by nature and has been with us humans since time immemorial. This is how we effectively teach children, and how we now want to teach our computer systems, real (neural networks) or simulated (MAS).

RL re-entered public consciousness and rekindled our interest in 2016, when AlphaGo beat Go champion Lee Sedol. Via its currently successful PGs, DQNs and other methodologies, RL has already contributed to, and continues to accelerate, make more intelligent and optimise, humanoid robotics, autonomous vehicles, hedge funds, and other endeavours, industries and aspects of human life.

However, what is it that optimises or accelerates RL itself? Its new frontiers are PTL, memetics and the holistic eTL methodology inspired by natural evolution and the spreading of memes. This latter evolutionary (and revolutionary!) approach is governed by several meme-inspired evolutionary operators (implemented using FALCON and a BP multi-layer neural network), including meme evolution.

eTL appears to outperform even state-of-the-art MAS TL systems such as PTL.

What future does RL hold? We don’t know. But the amount of research resources, experimentation and imaginative thinking being invested in it will surely not disappoint us.